|

| |

|

| |

|

|

|

|

TCHS 4O 2000 [4o's nonsense] alvinny [2] - csq - edchong jenming - joseph - law meepok - mingqi - pea pengkian [2] - qwergopot - woof xinghao - zhengyu HCJC 01S60 [understated sixzero] andy - edwin - jack jiaqi - peter - rex serena SAF 21SA khenghui - jiaming - jinrui [2] ritchie - vicknesh - zhenhao Others Lwei [2] - shaowei - website links - Alien Loves Predator BloggerSG Cute Overload! Cyanide and Happiness Daily Bunny Hamleto Hattrick Magic: The Gathering The Onion The Order of the Stick Perry Bible Fellowship PvP Online Soccernet Sluggy Freelance The Students' Sketchpad Talk Rock Talking Cock.com Tom the Dancing Bug Wikipedia Wulffmorgenthaler |

|

bert's blog v1.21 Powered by glolg Programmed with Perl 5.6.1 on Apache/1.3.27 (Red Hat Linux) best viewed at 1024 x 768 resolution on Internet Explorer 6.0+ or Mozilla Firefox 1.5+ entry views: 3529 today's page views: 1383 (11 mobile) all-time page views: 3736931 most viewed entry: 18739 views most commented entry: 14 comments number of entries: 1256 page created Sat Mar 7, 2026 02:25:55 |

|

- tagcloud - academics [70] art [8] changelog [49] current events [36] cute stuff [12] gaming [11] music [8] outings [16] philosophy [10] poetry [4] programming [15] rants [5] reviews [8] sport [37] travel [19] work [3] miscellaneous [75] |

|

- category tags - academics art changelog current events cute stuff gaming miscellaneous music outings philosophy poetry programming rants reviews sport travel work tags in total: 386 |

| ||

|

- academics - Despite my best efforts at antisocialism, in keeping with state ethos, there were still two classmates' weddings to be attended to over the weekend, which were made far more entertaining by the availability of an extremely friendly baby (also by a classmate) with a taste for table leavings (he was to be repeatedly denied) Sunday morning was then spent on a community walk-a-jog, which was all that could be managed with the route clogged by a mass of humanity. The three kilometres therefore took twenty-odd minutes, and was perhaps not even that impressive given that I had probably trekked a longer distance in total on Friday, between meetings and a slightly inaccessible hotel ballroom. After that, it was time to make arrangements for the conference trip and prepare the final version of the paper, which persistently struck me as being too simple, even accounting for familiarity. I eventually resolved to file these considerations away for next time, when a submission gets rejected (which may after all be slightly random) At the same time, Mr. Ham begged leave to facilitate "transfer of personnel", and I was certainly not about to question a hamster flanked by dozens of wild-eyed fanatics. Meanwhile, Herr Ahm has noted by email correspondence that local luminaries have indeed begun to play nice, with one MP now knowing to say that simply piling on the people is not feasible, while her boss hastened to add that integration is a two-way street, after some lag time. Not only that, the casinos and death penalty have come under review - it's almost as if they read too much into Monsieur Jambon's ramblings! Despite these promising developments, Herr Ahm was careful to reiterate that, especially in politics, talk is cheap (and intepretations, as to which verbs can be applied to which nouns, definitely inventive) - hard figures, then, should be kept in sight. He was also diplomatically non-committal on a domestic titan of investment taking out huge advertisements to proclaim loudly that it is, in fact, making money, quite sensibly pointing out that known cash-spinners like, say, Apple or Google never bother to hype up the fact.  Took time out for my sister's commencement too It was therefore left to poor Mr. Robo to handle the grunt work. Before I go into his research, I should note that perhaps the harder part is sometimes not in the doing, but in the having others understand what is being done, i.e. the teaching. Often, when reading a paper, I cannot help but ask myself whether it has bothered to explain itself in the most approachable manner, even considering page limits. Therefore, I attempt here to present Mr. Robo's results such that it is as widely accessible as possible, with as little prior knowledge or willingness to digest huge chunks of unbroken text assumed as possible. The Task Some research is theoretical, in which a model or method is proposed, and argued without reference to actual data (which to be fair may not yet be available) to be a good way of describing or handling a problem for which demand may or may not be existent. This is not that type of research. I, together with Mr. Robo, and so says himself Mr. Ham, have been striving to make computers read embellished or distorted words known informally as CAPTCHAs for awhile now; a simple (mostly-)working template-matching system for input with lines struck through was detailed last November. More recently, analysis has been done on 2000 independent samples of the industrial-strength Google CAPTCHA this May, with contributions by several laudable anonymous volunteers. Finally, a follow-up post last month described how the distortions used may be produced, and perhaps reversed. Today's offerings are in a way a step back, but a necessary one; at the end, a CAPTCHA/word recognition system depends on being able to recognize individual characters (here, we ignore theoretically-possible but in practice less-seen holistic paradigms). Naturally, the success of the system rests heavily on how accurate these individual diagnoses are.  Mr. Robo's current workflow (Diagram by Dia) But isn't learning this trivial, given how small text images can be? Not necessarily, as even a mere 20x20 image produces an input space of 400 dimensions, with 2400 possible configurations (far more with floating point representation), which as the chessboard story says, is already unimaginably huge. This is the famous curse of dimensionality issue in machine learning, basically the intuition that each additional dimension exponentially increases the number of options.  Example in just two dimensions, ten samples per class; A possible SVM classification dividing plane is in black, the nearest-neighbour prediction is shaded in the background. Knowledge about the green region is especially thin. Thankfully, this is often not intractable in practice, as we shall see. Additionally, there are at least two often-useful basic strategies to mitigate it - either to generate more data to cover more of the space with confidence, or to inversely map test data such that it conforms to a smaller effective space.  Left: Generating more data; blue arrows are transformation functions Right: Normalizing data; red arrows are inverse functions In the case of text recognition, the first strategy would involve deforming some family of characters, which has the advantage of being easy if the scope of possible deformations can be estimated; however, this does have the drawback of generating larger (and slower) models. The other strategy, inverse transformation, is then especially attractive as it would simplify the model. However, as it is not easy to decide on an inverse without having some idea of what the character actually is (in which case the character recognition problem would already have been solved), usage tends to be limited to very simple universal adjustments, such as deskewing.  Perfect recognition accuracy can be achieved - true or false? (Source: Somewhere on the Internet) The Background When attempting something, it is usually proper (if sometimes ego-dampening) to examine what those greater than oneself have tried in the past. One of the cardinal measures in the field of character recognition is the MNIST database of handwritten digits, used by LeCun in 1998 to demonstrate the efficacy of neural networks in this respect. While the dataset is available in text format, Mr. Robo availed himself of Roweis' convenient and easily-visualized JPEG conversion. Just as importantly, the MNIST database page has been extremely well-maintained (not the norm for academic research), and lists the results of numerous approaches, the best of which currently achieves a mere 0.23% error rate as opposed to the 0.7% (by convolutional nets) and 1.4% (by basic Gaussian kernel SVM) reported by the original paper. Since the recognition of individual characters is so crucial, I thought it proper to hold a tender, so to say, and decided to pit two of the most well-known approaches - neural networks (NNs) and support vector machines (SVMs) - against each other. This choice was predicated on a requirement to obtain results as quickly as is reasonable, without having to reimplement tons of code, since while fundamental, character recognition is not the actual thrust of our hobby research; happily for us, proven public implementations of both exist: the Fast Artificial Neural Network (FANN) library, as well as LIBSVM, which has already made its appearance here. Before we begin, which do I expect to be better? Well, there is no easy answer (and I doubt any exists), but we can make some observations by drawing upon various sources:

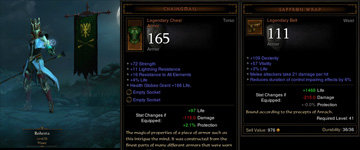

[*For FANN vs. LIBSVM; your mileage may vary] Without further ado, the results by Mr. Robo, LIBSVM first. Note that the parameters (C≈2.83, gamma≈0.00729) were taken from prior blogged research by Mueller, although I did confirm their plausibility through my own crude grid search:  From top to bottom, the graphs show the classification accuracy (most important), the total training and test times and the model file size for LIBSVM as a function of training set size, for three settings: the red lines indicate the results when the entire 28x28 characters are used, and the green lines when a 4-pixel empty border is cropped. The blue lines occur when instead of using the actual normalized greyscale pixel values, the characters are binarized to monochrome. First off, it can be noted that there is a small divergence in accuracy when the strictly-speaking information-less border is removed, which might be put down to the corresponding optimal parameters becoming slightly different; here, Mr. Robo apologizes for having to pick and choose which combinations to consider, which is frankly unavoidable. The 98.58% achieved does about replicate Mueller's findings, which in turn collaborate LeCun's 1998 ones. Moving on, both training and testing times seem to scale linearly with the amount of data (the respective graphs are in log scale on both axes). Incorporating the entire MNIST training set of 60000 items takes about 12 minutes tops on my run-of-the-mill desktop, which I suppose is very serviceable; the corresponding test speed is about 50 characters per second, again probably acceptable for now. The model file is however some 111MB large (about 20MB, compressed), which while unwieldy is not an obstacle to deployment. LIBSVM can be configured to produce probability estimates (like neural networks do inherently), which happens to be important for our purposes - the following is an illustration of how these estimates vary for different types of predictions:  It is observed that when the prediction is correct, the estimate tends to be very high - 0.95 and above for 90% of such guesses (in the above graph, it is 99.996% sure for the character "1"). On the other hand, when the prediction turns out to be wrong, LIBSVM knows to be less certain, with 70% of such guesses having a confidence of 0.8 or below. As an example, it is 44.8% sure that the "2" is actually a "3", instead of actually being a "2" (42.5% sure). There are certainly bad assertions, such as being 95.0% sure that the "0" is a "7", though this may be understandable for this case. Just for completeness, the grid search visualization for LIBSVM (brighter is better, full dataset rightmost):  On to the challenger, the FANN neural network:  The above results were obtained with a single hidden layer of 300 nodes, with accuracy reaching a maximum of 94.24%, not that far off LeCun's claimed 95.3% for this configuration. The training time of some two days was however something of a downer, although I suppose it could have been shorter had proper error thresholds been known and set beforehand. Unfortunately, neural networks seem to have no practical guarantees on convergence, as an experiment on a training subset achieved a mean square error of 0.01774 after some 110000 epochs and 0.01457 at some 180000 epochs, but went back to about 0.04 as the 500000 epoch limit approached. That said, I am a novice at FANN and neural networks in general, but at this point it is hard to motivate using neural networks for the next stage. The model files are quite a bit smaller (~2.8MB), but that is scant consolation. Note that investigations on tuned single hidden-layer neural networks (building on the local invention of extreme learning machines, which as far as I can tell trains much more quickly than normal neural networks, by fixing the weights - naturally, one expects some form of sacrifice in general for this advantage) just last year have yielded a 1.5% error rate with 2048 hidden units and about 3% error with 256 hidden units, which while somewhat better than the 5.76% error of our admittedly naive attempt, is still not particularly notable especially when compared to the LIBSVM offerings. It should be kept in mind that (rather more complex) neural network setups are enjoying something of a renaissance, with convolutional (custom-structured), committee (ensemble?) voting and deep multi-layer networks shown to produce results superior to SVMs and other techniques for some problems. However, we shall not consider these as yet, as improvements of that degree are not critical to our explorations; if required, they can be swapped in later to replace our existing character recognition module. While certainly possible for him, Mr. Robo has declined to apply KNN to MNIST (perhaps a snub to Mr. Ham), trusting instead in given results of some 95% for a basic Euclidean (per-pixel nearest distance) implementation, up to above 99% with preprocessing (covered above). His decision is further justified by KNN being generally having poorer performance than SVMs anyway, and perhaps more importantly are slow in usage, as the training set is the model; interestingly, RBF neural networks share commonalities with KNN (which is actually just extended template matching, come to think of it) More Doings It turns out that there's more from Mr. Robo! Consider that the MNIST dataset, while comprehensive, is in the end still a handwritten collection of digits, while the Google (and many other) CAPTCHAs involve the 26 letters, possibly from multiple distinct fonts. It would therefore be remiss for him not to take all of this into consideration. As such, Mr. Robo went to the trouble to (again) cook up his own data. To begin with, he selected ten extremely common web-safe fonts as a baseline, and then went on to find ten normal-looking (of the sort that people wouldn't mind reading for long periods) Google web fonts. Finally, as a control, he found ten decorative fonts to round it all up. The entire collection follows:  Top row: Web-safe Middle row: Google Bottom row: Decorative [N.B. Many more examples from Douglas Hofstadter's Metamagical Themas] Mr. Robo then first began by attempting to classify the trusty Times New Roman (undistorted, at a size of 21x21 pixels) by LIBSVM. Unsurprisingly, he achieved 100% classification accuracy on the same. [N.B. when the -b flag is enabled in LIBSVM to enable probability estimates, the training data has to be duplicated for classification to work - a (maximum) estimate of some 0.107 is then achieved. This can be increased to about 0.138 by tripling the data, and probably even further by repeated copying, as a consequence of how the estimates are computed; while it was noted that SVM does relatively well with little data, the results still tend to be poor when the number of features is much greater than the number of training samples, which applies here] Classification accuracy however falls to 72.2% when this very basic model is applied to the nine other web-safe fonts. Now, at this juncture, Mr. Robo asked himself two questions: Firstly (while pulling on a toga to honour Plato), what is the true form of a character, and can it be usefully described? Secondly, how much information is actually required for classification? After some discussion and overconsumption of wine and huffing of fig leaves, a high Mr. Robo determined that while the true form is ineffable and sublime, man, an attempt to approach it might be made by skeletonizing the font and dilating to a uniform stroke thickness to reduce variability, give me another hit of that. The outcome is shown below:  This observation was then further quantified by computing the total pixel difference between each font and the overall mean, for each letter. As seen below, the difference is reduced somewhat (~12%) for the web-safe and Google font classes. Mr. Robo then generated a larger 2600-item Times New Roman training set by adding rotations and distortions, and got 81.19% accuracy on the other nine fonts without this skele-dilate procedure, and 88.89% with. Therefore, unless otherwise noted, it will be employed from this point onwards.  What then is the required amount of information, i.e. how large does the character image have to be, to produce good classification results? To answer this, Mr. Robo varied the size of the character images from 1 pixel to 31 pixels square, and tabulated the accuracies. Not unexpectedly, 1x1 and 3x3 are just too small to predict anything much better than random chance. However, there is also no significant improvement once the size hits about 15x15. For some reason, 19x19 produced the best results (perhaps due to the fixed width of the skele-dilate procedure?), and was chosen to proceed with.  Next was to try and create a practical omni-font model by not including just a single font, but many of them. To this end, Mr. Robo tried a leave-one-out method on the web-safe fonts, each time picking one font as the test set, and using the remaining nine fonts to train a model of size 23400 (with 100 variations of each of the 26 letters for each font). A test set of size 2600 was also produced using the same range of deformations. Encouragingly, the results were not too bad, with an average classification accuracy of 94.06% for all ten fonts, 96.67% if Impact, which got only an understandable 70.54% given how different it looks from the others, is excluded. In this vein, the over 99.5% accuracies for Tahoma and Verdana might be explained by how alike they are (which means that when they are being tested on, there is nearly bound to be something very similar-looking already in the trained model) When all ten web-safe fonts were used to train a model and the model applied to the ten Google fonts, a classification accuracy of 95.85% is achieved. For the decorative fonts, the accuracy was pretty poor at 38.58%, but this is only to be expected given how distinct their appearances tend to be. These findings are summarized below:  Just for fun, Mr. Robo also ran the generated set of 2600 distorted Times New Roman characters using vanilla untrained Tesseract-OCR (resized to 190x190, with the -psm 10 flag, and configured to the set of 26 valid characters); 1324 out of the 2600 (50.92%) were correctly recognized, which he supposes is fair given the circumstances. While Tesseract can be trained, Mr. Robo will not be trying that for now. Mr. Robo stopped here for a deserved break, affected by news that Google's massive artificial neural network brain prefers cat videos, which more seriously probably reinforces the big data paradigm (example application covered in computational photography). This may be a relief, coming as critical thinking's standing plummets further - someone, ham or machine, has to take up the slack. Happily, identifying when such assistance is appropriate may not be that hard. Mr. Ham: Ah, I see my research is coming along well. Mr. Robo: Your research?! Mr. Ham: Ok, our research. I am a magnanimous scholar, after all. On top of that, I levelled a new Diablo 3 Wizard to Level 53, and found two artifacts on the way:  That's Chiangmail and Saffron Wrap Mr. Robo: Can somebody please stop him from continually stealing my thunder? He's not even doing anything remotely practical! Mr. Ham: Ooh, not so fast, companies are already conducting interviews in Diablo 3! Mr. Robo: ... Mr. Ham: Don't feel so bad, I'm always here to give you some tips on building up some characters that matter, if you know what I mean. And oh, the Gods and Kings expansion for Civilization V came out. Guess that's why Mr. Human is more engaged than usual! Next: Getting Phoney

Trackback by lotto pro

|

||||||||||||||

Copyright © 2006-2026 GLYS. All Rights Reserved. |

||||||||||||||