
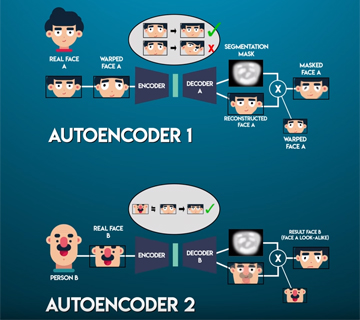

Dragonboated for the first time in, what, sixteen years, for my unit's Cohesion Day; there's a reassuring simplicity to just pulling, in rhythm, over and over again. Returning to my usual life, I'll continue with the first of two overdue tech discussions:

Deepfakes

It was coming. Hey, netizens have been pulling off humorous face-swapped photos for eons, first with Photoshop and then with apps (also: the ubiquitous Snapchat dog-face filter), and there was Face2Face a couple of years back [paper], which admittedly focused purely on mouth gestures. How hard, then, could transplanting the eyes and nose be?

As it turns out, not very. Some months ago, FakeApp was released, allowing seamless face transfer on videos. Predictably, this has energized dabblers to put it to productive use, such as subbing Nicolas Cage in as every actor in a movie, but probably mostly to, ahem, more risqué ends.

What manner of sorcery is this?!

And yes, the technology. A brief comparison with the now-dated Face2Face mouth expression transfer tech: back then, a similarity-based energy metric was used to retrieve, from the frames of the target (to-be-doctored) video, the mouth appearance closest to the source gesture. And, actually, the DeepFake pipeline remains broadly the same: for each frame in the video, the face is detected, run through an autoencoder to generate the replacement face, and merged back in.

So, what's an autoencoder? It can be thought of as a function consisting of two parts: an encoder that converts an input into another (usually compressed) representation, followed by a decoder that converts that representation back into the (perhaps slightly different) input. If it helps, compression can be thought of as a form of autoencoding: when you zip a file, the original binary data is converted (encoded) into a (hopefully smaller) representation, the zipped file; and when that zipped file is uncompressed (decoded), the original file is obtained. To extend this intuition, however, popular image compression formats such as JPEG are not quite autoencoding; they work more by discarding relatively unimportant data, such that the compressed representation remains directly interpretable as a (lower-quality version of the) image.

Certainly, neural-network-based autoencoders (henceforth, just autoencoders) can be trained on image data, although, as the Keras tutorial notes, this is seldom worth it for pure compression. The beauty of autoencoders lies instead in their flexibility: just throw (enough of) any data at them, and they'll generally learn a decent representation for you. This property is cleverly exploited in the DeepFake setup, which utilizes a single encoder and two decoders:

The heart of the system (Source: DeepFakes Explained video, at 6:30)

Before we continue, a quick technical note: DeepFake is built on Google's TensorFlow library (which would have saved me rolling my own GPU code some years back), and the more user-friendly GUI, FakeApp, is a 1.8GB torrent download. The actual underlying scripts are, however, much more lightweight, and can be obtained from an unofficial GitHub repo. The autoencoder architecture can then be examined at plugins\Model_Original.py; a low-memory variant, Model_LowMem.py, appears to be identical except that the dimensionality of the encoder's dense (representation) layer is halved, from 1024 to 512.
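To make the encoder/representation/decoder idea concrete, here is a toy sketch in pure Python. It is not the DeepFake model (which is a far larger deep network built on TensorFlow); everything here is an illustrative assumption: linear layers, 2-number inputs, a 1-unit "dense layer" (standing in for the 1024- or 512-unit one), the hypothetical helper names, and plain full-batch gradient descent on mean squared reconstruction error.

```python
from typing import List, Sequence, Tuple

Vec2 = Tuple[float, float]


def train_linear_autoencoder(data: Sequence[Vec2], lr: float = 0.05, steps: int = 500):
    """Train a 2 -> 1 -> 2 linear autoencoder by full-batch gradient descent
    on the mean squared reconstruction error."""
    enc = [0.5, 0.1]  # encoder weights: z = enc . x  (the 1-unit "dense layer")
    dec = [0.3, 0.2]  # decoder weights: x_hat = dec * z
    n = len(data)
    for _ in range(steps):
        g_enc = [0.0, 0.0]
        g_dec = [0.0, 0.0]
        for x in data:
            z = enc[0] * x[0] + enc[1] * x[1]                # encode
            err = [dec[0] * z - x[0], dec[1] * z - x[1]]     # decode, compare
            back = dec[0] * err[0] + dec[1] * err[1]         # gradient w.r.t. z is 2 * back
            for j in range(2):
                g_dec[j] += 2 * z * err[j] / n
                g_enc[j] += 2 * back * x[j] / n
        for j in range(2):  # update both layers simultaneously
            enc[j] -= lr * g_enc[j]
            dec[j] -= lr * g_dec[j]
    return enc, dec


def reconstruct(enc, dec, x: Vec2) -> List[float]:
    z = enc[0] * x[0] + enc[1] * x[1]   # encode: 2 numbers -> 1 number
    return [dec[0] * z, dec[1] * z]      # decode: 1 number -> 2 numbers


def squared_error(a, b) -> float:
    return sum((p - q) ** 2 for p, q in zip(a, b))


# Toy data: every point lies on the line y = 2x, so one number suffices.
data = [(t, 2 * t) for t in (-1.0, -0.5, 0.0, 0.5, 1.0)]
enc, dec = train_linear_autoencoder(data)

on_line = (0.7, 1.4)   # same structure as the training data
off_line = (1.0, 0.0)  # structure the autoencoder never saw
print(squared_error(reconstruct(enc, dec, on_line), on_line))
print(squared_error(reconstruct(enc, dec, off_line), off_line))
```

The on-line point comes back almost exactly, while the off-line point cannot (its reconstruction is forced onto the line y = 2x). Unlike zip, which round-trips any file, the learned 1-number code only captures structure present in the training data; that is the sense in which an autoencoder "learns a representation".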
The repo also includes some user-added generative adversarial network (GAN) scripts.

The slightly surprising part here is that no constraints are applied to the dense layer, unlike, for example, in variational autoencoders; as such, it seems that faces of different people with the same expression do naturally map to similar representations in the dense layer. In other words, if a photo of Person A with mouth open has a vector representation v_A in the trained encoder, a photo of Person B with the same expression would produce a vector v_B ≈ v_A. Then again, since the basic structure of (aligned) faces is all but identical at major landmarks like the eyes, nose and mouth, perhaps this is not that unexpected.

What remains is conceptually straightforward. With hundreds (preferably thousands or more) of images of both subjects (victims?), we train the shared encoder, together with each subject's own decoder, to reconstruct that subject's faces; the same expressions of either subject should then end up with similar dense representations. Then, to morph Person B's face onto Person A's face in the original video, we detect Person A's face in each frame and run it through the encoder, before decoding it with the decoder for Person B. Recall that Decoder B is specialized to generate only Person B's faces; therefore, we'll get an image of Person B with the same expression as that of Person A, which we can then merge straight back into the video.

Perhaps the most famous example (Source: dailymail.co.uk)

A pertinent point, then, is that the generated and overlaid face is not truly that of the target person. Strictly speaking, it is an indirect representation, akin to a sketch artist producing his own rendition of his sitter. This setup also allows the decoder to make up for missing data to an extent, by using its learned conceptual representation of a particular face to "fill in" the gaps. And, despite some very impressive examples, creating good fake videos still takes some work.
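The swap of Person B's face onto Person A's, as described above, can be sketched as a toy in pure Python. Loudly stated assumptions: faces are 3-number vectors rather than 64x64 crops; the shared encoder is fixed by hand to read off mouth openness (in DeepFake it is learned, and only assumed to give matching codes for matching expressions); each decoder is an affine least-squares fit on one person's faces only; face detection and merging are skipped. All names are hypothetical, not from the DeepFake scripts.

```python
from typing import Callable, List, Sequence

Face = List[float]

# Hypothetical toy data: each "face" is 3 numbers instead of a 64x64 crop.
# The first two encode identity; the last one is how far the mouth is open.
IDENTITY_A = (0.9, 0.1)
IDENTITY_B = (0.2, 0.8)


def make_face(identity: Sequence[float], mouth_open: float) -> Face:
    return [identity[0], identity[1], mouth_open]


def shared_encoder(face: Sequence[float]) -> float:
    # Stand-in for the shared encoder: fixed by hand to read off the
    # expression only (the real one is learned jointly with both decoders).
    return face[2]


def fit_decoder(faces: Sequence[Sequence[float]]) -> Callable[[float], Face]:
    # Fit an affine decoder face_hat = bias + z * slope by least squares,
    # using only ONE person's faces, so it can only ever draw that person.
    codes = [shared_encoder(f) for f in faces]
    n = len(codes)
    z_mean = sum(codes) / n
    z_var = sum((z - z_mean) * (z - z_mean) for z in codes)
    bias, slope = [], []
    for j in range(len(faces[0])):
        x_mean = sum(f[j] for f in faces) / n
        cov = sum((z - z_mean) * (f[j] - x_mean) for z, f in zip(codes, faces))
        s = cov / z_var
        slope.append(s)
        bias.append(x_mean - s * z_mean)
    return lambda z: [b + z * s for b, s in zip(bias, slope)]


def swap_faces(frames_a: Sequence[Face], decoder_b: Callable[[float], Face]) -> List[Face]:
    # For each frame of Person A: encode with the shared encoder, then decode
    # with Person B's decoder. (Each toy "frame" is just the aligned face.)
    return [decoder_b(shared_encoder(face)) for face in frames_a]


train_a = [make_face(IDENTITY_A, k / 10) for k in range(11)]
train_b = [make_face(IDENTITY_B, k / 10) for k in range(11)]
decoder_a = fit_decoder(train_a)
decoder_b = fit_decoder(train_b)

video_a = [make_face(IDENTITY_A, m) for m in (0.0, 0.5, 0.8)]
swapped = swap_faces(video_a, decoder_b)
for face in swapped:
    print([round(v, 3) for v in face])
```

Each swapped face keeps Person B's identity values while taking on Person A's mouth openness frame by frame. The hard part this toy sidesteps by construction is getting a learned encoder to produce matching codes for both people.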
Firstly, a ton of images of both subjects is required, with insufficient images leading to poor outputs; this is, however, perhaps not that big a problem for celebrities. Secondly, rarer profile poses may be an issue, as may close-ups, with the autoencoder apparently working on 64x64-pixel inputs. This may explain why the successful examples tend to be clips where the subject is some distance away and mostly facing the camera straight-on. Lastly, unlike Face2Face for example, there don't seem to be any flow constraints between frames. This may contribute to sudden strange "flashes", if a frame in the middle of a sequence has a less-compatible face generated for it.

Of course, none of these weaknesses are insurmountable, especially for well-funded professional outfits (such as the now-exposed American Deep State). This has clear implications for video as evidence, particularly when combined with voice synthesis (perhaps even by the same encoder-decoder mechanism), which would allow convincing evidence to be produced of any public figure saying and doing just about anything. Very fortunately, interest thus far has been mainly restricted to naughty vids, which has led to the deepfakes subreddit being banned (go to r/fakeapp instead), together with the vids themselves on major platforms; but, let's be honest, bans have never worked...

[To be continued with machine translation...]

Copyright © 2006-2024 GLYS. All Rights Reserved.