bert's blog v1.21


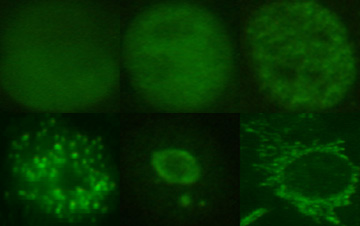

- academics -

There's a fair load on my plate these days, so I'll be terse (as much as I can be) with the short review of academia that follows, which may hopefully be of interest to (prospective) graduate students. No warranty implied on any advice.

Did squeeze in another DotA session, in which I reacquainted myself with Puck, Visage, Tauren Chieftain, Tidehunter, Naga Siren and Chechen (formerly just Chen) in that order. There appears to have been a shift towards activated skills while I was away: Tidehunter's Anchor Smash is now one (and reduces damage) instead of the old random cleave, while Naga Siren has lost her passive high-proc Critical Strike (which can get impressive with images) for Rip Tide, which looks like a Wave of Terror variant.

In fact, I am halfway through downloading the DotA 2 Beta, courtesy of bim. It'll do nicely for my spare time until Diablo 3 comes out in a month.

Seen On Biz (Comp?) Canteen Table

Go decode it yourselves

If I could set exam questions, these might be on the list:

Q1(a). What is this square thingy called? (1 mark)

Q1(b). How much information can be conveyed by that thingy? Assume that alphanumeric characters, the comma and period comprise the character set. (2 marks)

Q1(c). What are the smaller square thingys at each corner for? (1 mark)

Q1(d). Describe an algorithm to extract data from the thingy in the image above, using the available commands READPIXEL(image,x,y) to get the RGB value of the pixel at image(x,y), SUBIMAGE(image,subimage,x1,y1,x2,y2) to extract a subimage from an image, and SETPIXEL(image,x,y,R,G,B) to set the value of a pixel. (6 marks)

Q1(e). Describe two ways in which error-checking might be incorporated into the thingy. (2 marks)

Q1(f). Due to budget cutbacks, all you have left is a mechanical thingy that can sweep each row of the square thingy accurately, but can only detect changes in intensity (i.e. white changing to black, or vice versa).
Explain how this affects the information capacity of the square thingy. (3 marks)

Alternative Use Of Spare Time

Why not automatically classify some cells? Masked images of individual cells (which makes the process so much more convenient) are supplied, and the target then is to predict which one of six classes a cell belongs to.

How hard can this be, right?

[N.B. Each of the six (rescaled) cells shown belongs to a different class. Clockwise from top left: homogeneous, fine speckled, coarse speckled, cytoplasmatic, nucleolar, centromere]

This is one of those problems which can get infuriating: it's so obvious to a human, why can't a computer do it well? Curious as to how a simple solution rigged up over the weekend might perform, I basically got the computer to describe the characteristics of large bright spots and small bright spots within each image, together with some crude measure of how "rough" the cell texture is.

Having produced some sixteen features as a descriptor of each cell, I then split the full set of 721 images into halves, one half of which was used to train a support vector machine (using the extremely useful LIBSVM, and grid search to get good parameters), and the other half used to test the model produced.

[N.B. No need to worry if one does not know exactly what a support vector machine is (I don't either); it doesn't detract from its usefulness as a black box]

Since I couldn't be certain whether results obtained as such were dependable, I repeated the entire procedure a total of ten times (with different randomly generated training/test splits each time) with the found parameters. As it turns out, this basic method appeared to be able to correctly classify a cell an average of about 88% of the time (minimum for a split: 85.3%, maximum: 90.3%) on the ten instances (and some 93% if the entire dataset is tested on), which should hopefully be close to the results on the held-out real test set, if the selection process isn't biased.
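For the curious, the repeated half/half split procedure described above can be sketched roughly as follows. This is only an illustration under loud assumptions: the data is synthetic toy data (three made-up classes, two features), and a nearest-centroid classifier stands in for the LIBSVM support vector machine so that the sketch runs on the standard library alone. All function names here are hypothetical and none of this is the actual code used for the cells.

```python
import random
from statistics import mean

def fit_centroids(features, labels):
    """Stand-in 'training' (NOT an SVM): average the feature vectors of each class."""
    sums, counts = {}, {}
    for vec, lab in zip(features, labels):
        acc = sums.setdefault(lab, [0.0] * len(vec))
        for i, value in enumerate(vec):
            acc[i] += value
        counts[lab] = counts.get(lab, 0) + 1
    return {lab: [s / counts[lab] for s in acc] for lab, acc in sums.items()}

def predict(centroids, vec):
    """Pick the class whose centroid is nearest (squared Euclidean distance)."""
    return min(
        centroids,
        key=lambda lab: sum((a - b) ** 2 for a, b in zip(centroids[lab], vec)),
    )

def repeated_split_accuracy(features, labels, repeats=10, seed=0):
    """Train on a random half, test on the other half, and repeat."""
    rng = random.Random(seed)
    indices = list(range(len(features)))
    scores = []
    for _ in range(repeats):
        rng.shuffle(indices)  # a fresh random training/test split each time
        half = len(indices) // 2
        train, test = indices[:half], indices[half:]
        model = fit_centroids(
            [features[i] for i in train], [labels[i] for i in train]
        )
        hits = sum(predict(model, features[i]) == labels[i] for i in test)
        scores.append(hits / len(test))
    return scores

# Synthetic toy data (an assumption for illustration, not the cell images).
data_rng = random.Random(1)
features, labels = [], []
for lab, centre in enumerate([(0.0, 0.0), (4.0, 0.0), (0.0, 4.0)]):
    for _ in range(60):
        features.append(
            [data_rng.gauss(centre[0], 1.0), data_rng.gauss(centre[1], 1.0)]
        )
        labels.append(lab)

scores = repeated_split_accuracy(features, labels)
print(f"mean {mean(scores):.3f}, min {min(scores):.3f}, max {max(scores):.3f}")
```

Reporting the minimum and maximum alongside the mean gives some feel for how much a single lucky (or unlucky) split could mislead.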
All this is barring (not totally unexpected) procedural errors, of course.

Is this good? Well, for a baseline, naive guessing would classify a cell correctly up to about 30% of the time, and that only by always picking the largest class (which has some 200-odd of the 721 members). A cursory search for past research on Google Scholar sees claims of about 75% (supposedly close to a human expert!) on what seems like a similar experiment, though certainly no direct comparison can be made without a common dataset, and in any case that paper is slightly dated (hailing from 2002). Others found gave accuracies in the range of 75% to 85%, so the above findings shouldn't be too embarrassingly shabby.

Either way, it can't be tougher than the PhD Challenge (courtesy occ)

However, one thing that has to be remembered in automated medical applications is that constant monitoring should be the norm. If some subtle adjustment in image characteristics, say something like dirt on the lens or a new bulb, throws off the functioning of the system, it needs to be caught as soon as possible (well, perhaps unless some generous margin of error was acceptable in the first place); operating system companies can get it wrong every other day and receive nothing much more serious than boycott threats, but not so operating tables.

And On To Academia

More disclosure first: I won't be gunning for an academic job straight off (even if they would have me, a huge if). Having spent so many years at university, my instincts are yelling at me to get away from it and seek out a new environment for some time, and they haven't let me down too often. And then there's that little matter of actually getting out with that one more piece of paper...

But, some might say, what is the point of academia? Doubtless many (and even some undergraduates) think that it's about teaching the undergraduates, but they may be surprised.
While teaching is not completely unimportant, and is supposedly greatly enjoyed by (or even the major motivation for) certain professors, it does not count for that much at most universities to the best of my knowledge, the occasional token teaching award aside. There's always the bell curve to fix things on that end, after all. No, the currency of colleges is research.

[N.B. It could be wondered what proficiency at research has to do with being a good teacher who has some inkling of pedagogy, or at the very least an intelligible accent. The frank answer is, extremely little.]

Now, currencies need a unit, and that unit, in research, is papers. If you imagine each paper written by an academic and published in some journal or conference as a dollar note, legal tender only in universities, you would not be too far off reality.

The distinction kind of blurs after a while

Certainly, not all papers are created equal; there are groundbreaking papers (think of them as thousand-dollar bills) and then there are the far more common ho-hum incremental improvements, comparable to spare change. But how can we judge which is which, since a paper's importance, especially in an area one is not familiar with, is difficult to tell just by reading it?

The usual answer is citation count. Almost every academic paper references others, and very conveniently papers that are referenced often by others are generally important (which may be a circular definition). Therefore, if we are presented with two papers of the same age in the same field, and one has 4348 citations and the other 3, it should be safe to say that the first is the more influential paper. It follows naturally that authors of influential papers are better, at least to a faculty committee making a decision on tenure.

Now, as (aspiring) professors tend to be fairly bright, it has not escaped them that amassing citations is A Good Thing.
This may however have led to a publish-or-perish culture, in which relatively minor findings are presented in their own papers. That is generally well worth it, since no matter how epic a work is, any one paper can only cite it once; and if the authors feel like rewarding themselves, they can cite their own past papers! (Yes, it's perfectly acceptable if those papers are relevant, which, since they were likely along the same theme anyway, they should often be. This should, of course, not be overdone.)

Other than the obvious total citation count, another very popular metric is the h-index: the largest number h such that h of an author's works have each been cited at least h times (fittingly, the original paper introducing the index has been cited over 2000 times in seven years).

Some issues about this system do spring to mind. First off, as I agonized over in my first submission, there is in practice limited space to list references (I could only find space for 13, even with judicious et al-ing), and even a brief survey usually throws up dozens, if not hundreds, of possibly relevant papers. The final cut can tend to be somewhat arbitrary, and some snowball effect of citing already popular papers (it should be safe, right?) possibly accumulates. Note that some academics supposedly take not being cited harder than others. Public-service review papers are generally a good bet for scoring citations, but again can be too much of a good thing...

[To be continued...]

Next: Eye To Eye
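As a footnote to the h-index mentioned above, here is a minimal sketch of how it might be computed from a list of per-paper citation counts. The function name and the sample numbers are made up for illustration; only the definition itself (the largest h such that h papers have at least h citations each) is the standard one.

```python
def h_index(citations):
    """Largest h such that h papers have at least h citations each."""
    ranked = sorted(citations, reverse=True)  # most-cited paper first
    h = 0
    for rank, count in enumerate(ranked, start=1):
        if count >= rank:
            h = rank  # the top `rank` papers all have >= rank citations
        else:
            break  # counts only get smaller from here on
    return h

# Hypothetical author with five papers.
print(h_index([10, 8, 5, 4, 3]))
```

Note how one blockbuster barely moves the needle: an author with just the two papers from the earlier example (4348 citations and 3) still only manages an h-index of 2, which is rather the point of the metric.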


Copyright © 2006-2026 GLYS. All Rights Reserved. |
