

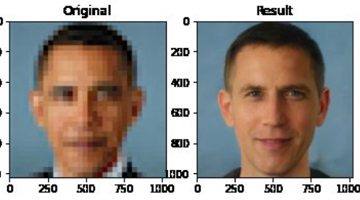

Christmas is over, and it's time to delve into the NeurIPS coverage that had been promised; before burrowing into the technical aspects, though, it might be noted that the top-tier machine learning conference has committed to quite a number of progressive accommodations in recent years. There was the changing of its official acronym from NIPS in 2018 due to its confluence with an innocent body part, to begin with, and the opening talk by Charles Isbell calling for more engagement with other fields (and accompanied by a sign language translator). Not that these moves have been universally acclaimed - there's the ongoing mumbling on whether "Latinx" makes sense given how "Latino" is already gender neutral (but yeah, "X"'s cool).

Staying on this track, the discontent in Google's ethical A.I. group has only increased in recent days, after their co-leader Timnit Gebru - who had been heavily involved in the conference's diversity efforts - was fired a few weeks back. The proximate cause appears to be the treatment of a research paper, which the Google higher-ups quashed because they had not been given the mandatory two weeks' notice for pre-publication internal review. As such, her bosses demanded that the submitted-and-accepted paper be retracted, to which Gebru responded with an ultimatum that supposedly included identifying the reviewers. Google declined, and instead chose to make the separation immediate - which, it should be said, is not an uncommon practice with disgruntled employees.

The paper itself, as summarized from a preprint supplied by a computational linguistics coauthor, appears to be a critique of the monolithic language models that Google has been championing (e.g. BERT, the best paper winner at last year's NAACL). For his part, Google's Head of A.I. Jeff Dean stated that it didn't meet their bar for publication, due to omitting too much of the relevant research, which I suppose is their prerogative.

In this case, it's difficult to judge the involved parties given the lack of details leading to the firing, but this wasn't the first time that Gebru had rubbed machine learning icons the wrong way. Facebook's Yann LeCun quit Twitter in June, recall, after an exchange with Gebru over the medium (which is probably unsuitable for thoughtful discourse in the first place) degenerated into unproductive mutual bashing between segments of the A.I. and activist communities.

June's flare-up began with a tweet on Duke's new PULSE A.I. photo recreation model, which generates a high-resolution face from a low-resolution (pixelated) image. To be frank, it wasn't exceedingly exciting in a technical sense, since the low-to-high resolution generative functionality appears largely akin to NVIDIA's celebrated Progressive GAN formulation, from 2017. The general upsampling/superresolution problem has been around for a long time, but as usual, deep learning has performed particularly well on it. The technology has by the way already made it into the consumer world in some form, with Samsung's 8K QLED televisions for instance touting the "A.I. Quantum Processor 8K" (which as far as I could tell, has nothing to do with quantum computing, but whatever sells...). Sounds innocuous enough, until it was found that a photo of Obama was enhanced by the model into a f**king white male:

Uh-oh... (Source: twitter.com)

This, it seems, prompted an associate prof from Penn State to comment on the dangers of bias in A.I., to which LeCun (IMHO, not unreasonably) stated that the bias towards phenotypical whiteness was due to the training dataset used, which contained mostly whites, and that the same model trained on, say, a Senegalese dataset would generate African faces. Here, Gebru interjected that she was sick of this framing, and that harms caused by machine learning couldn't just be reduced to dataset bias; the ensuing tribal acrimony had LeCun bail from the Twitter platform within the fortnight.

There has been quite a bit of commentary on the saga that I think might not have been articulated as well as it could have been. My own take is that this specific face-recreation task is an unwinnable Kobayashi Maru - by its nature, upsampling from lower to higher resolutions is a one-to-many mapping, i.e. for every low-resolution photo, there are (extremely) many high-resolution photos that correspond to it; one might also think of it as an underdetermined system with a large set of solutions. All the model can do is present one of these many plausible answers that fit.

Much of the outrage, one gathers, arose from a prominent Black figure (Obama) being "whitened", especially as the photo used in this demonstration appears to be his iconic official portrait - and it has been attested that humans are particularly good at recognizing blurred versions of famous faces. Of course, as LeCun was probably trying to say, the model has no such cultural knowledge, and intends no marginalization. If one were to try and look on the positive side, Diogenes' quote to Alexander on the bones of his father might be apropos here - at some basic level, we are all very similar.

The point, however, is that incorrect facial upsampling seems unfixable in general; one can no more guarantee a correct restoration than one can guarantee a unique solution to an underdetermined system. One could, I suppose, mitigate representational bias by resampling - which has seen some recent contributions - but this does not change the fundamentals, namely that one cannot assure lossless recovery (which would otherwise amount to obtaining data compression for free). In any case, one suspects that tweaking the input tone (as done for other Presidents) might have given the intended effect - as perhaps a suntanned and very white Jersey Shore bro might well be reimagined as a Latino instead. And - whisper it softly - isn't Obama genetically as White as he is Black anyhow?

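To make the one-to-many point concrete, here is a minimal sketch, assuming that downsampling is plain 2x2 average pooling on a tiny grayscale grid (the actual PULSE pipeline is far more involved, and the function name and pixel values below are made up for illustration). Each low-resolution pixel gives one equation in four unknowns, so quite different high-resolution images can collapse to the very same low-resolution one:

```python
def downsample_2x(image):
    """Average each non-overlapping 2x2 block of a grayscale image (a list of rows)."""
    rows, cols = len(image), len(image[0])
    return [
        [
            (image[r][c] + image[r][c + 1] + image[r + 1][c] + image[r + 1][c + 1]) / 4
            for c in range(0, cols, 2)
        ]
        for r in range(0, rows, 2)
    ]


# Two clearly different 4x4 "high-resolution" images (illustrative values only).
high_res_a = [
    [0, 100, 50, 50],
    [100, 0, 50, 50],
    [20, 20, 0, 80],
    [20, 20, 80, 0],
]
high_res_b = [
    [50, 50, 100, 0],
    [50, 50, 0, 100],
    [40, 0, 40, 40],
    [0, 40, 40, 40],
]

low_res_a = downsample_2x(high_res_a)
low_res_b = downsample_2x(high_res_b)

# Different inputs, identical 2x2 output: the mapping cannot be inverted uniquely.
print(high_res_a != high_res_b, low_res_a == low_res_b)
```

Any upsampler handed that 2x2 image has to pick one candidate among the many that fit, and which one it picks is decided by its prior (i.e. what it was trained on), not by the pixels in front of it.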
Same face, different race, in each column (Source: neurohive.io)

Anyway, because I dislike leaving conflicts hanging without some attempt at resolution, the obvious solution to the facial photo recreation controversy is... simply allow the user to choose. One merely has to mine the appropriate latent space direction for the desired feature (race or skin colour, in this case), a technique that has been available for StyleGAN for some years. These features could then be controlled with sliders, as in the character creation process in many computer games. Then, when the Black in A.I. group arrives for the demo, the team-mate hiding under the table would simply twist the appropriate knobs, ditto for the Korean group following them, et cetera. Straightforward, tio bo? Problem solved in this case, I hope.

There's something about faces that gets hackles rising in A.I., and one supposes Gebru did have a case in accusing current face recognition models of being less accurate on the darker-skinned - although given the concern over such models being used to profile minorities in other places (and possibly inappropriately for criminality, homosexuality, etc.), one could consider it a hidden blessing in some circumstances. Lest it be forgotten, facial recognition had been proposed to replace EZ-Link cards in Singapore as far back as 2016, which might give an inkling as to how advanced the relevant technology had already been. Automatic machine-gunners are under development here too, and to be honest, if Google etc. won't do it, others will, as evidenced by Turkey's recently-deployed gunner drones, and Mr. Ham six years ago this day in a way.

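For the slider idea, a rough sketch of one simple way to mine a latent direction: take the difference between the mean latent code of samples showing an attribute and the mean of those that don't, then nudge a code along it. This is only one of several approaches, and everything here is an assumption for illustration: the latent codes are synthetic Gaussians rather than real StyleGAN codes, no generator is actually called, and the function names are hypothetical.

```python
import random


def mean_vector(vectors):
    """Component-wise mean of a list of equal-length vectors."""
    dim = len(vectors[0])
    return [sum(v[i] for v in vectors) / len(vectors) for i in range(dim)]


def attribute_direction(with_attr, without_attr):
    """Unit vector pointing from the mean code without the attribute to the mean with it."""
    diff = [a - b for a, b in zip(mean_vector(with_attr), mean_vector(without_attr))]
    norm = sum(x * x for x in diff) ** 0.5
    return [x / norm for x in diff]


def apply_slider(latent, direction, amount):
    """Move a latent code by `amount` along `direction` (the slider position)."""
    return [z + amount * d for z, d in zip(latent, direction)]


def projection(latent, direction):
    """How far a latent code lies along `direction`."""
    return sum(z * d for z, d in zip(latent, direction))


rng = random.Random(0)
DIM = 8  # toy latent size; real models use hundreds of dimensions

# Synthetic stand-ins for labelled latent codes: the toy "attribute" shifts coordinate 0.
without_attr = [[rng.gauss(0, 1) for _ in range(DIM)] for _ in range(500)]
with_attr = [
    [rng.gauss(0, 1) + (2.0 if i == 0 else 0.0) for i in range(DIM)]
    for _ in range(500)
]

direction = attribute_direction(with_attr, without_attr)
latent = [rng.gauss(0, 1) for _ in range(DIM)]
edited = apply_slider(latent, direction, 1.5)  # slider set to +1.5

# The edit moves the code along the mined direction by exactly the slider amount.
print(projection(edited, direction) - projection(latent, direction))
```

In a real setup, the edited code would be fed back through the generator, and the labels would come from an attribute classifier run over generated faces; the slider itself is nothing more than the `amount` argument.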
The worry over uninterpretable black-box algos screwing vulnerable persons over is well-founded, granted, which is why I support continued reasoned discourse over such matters, instead of the seeming eagerness to skewer attempts at debate from the more-woke set (or worse, attempts at social shunning, recalling the religious control methods and purity tests that I personally detest).

Returning to the face generation task, it has been reported that the StyleGAN generator produces some 72.6% of its faces as White, 13.8% as Asian, 10.1% as Black and 3.4% as Indian - which, it seems, is roughly the actual demographic distribution for America (with Asians slightly oversampled, and Blacks slightly undersampled). I gather it fair, then, to inquire as to what would be regarded as an acceptably-diverse distribution; 25% for each of White, Asian, Black & Indian? Does that really make sense? And what if subgroups within these broad divisions (e.g. Chinese/Japanese/Korean etc. for Asian) refuse to be considered under the same umbrella? Do they then each get equal weighting with the rest of the groups?

Common values should be celebrated! [N.B. Up to a very wide latitude of forbearance] [N.N.B. For more cosy inter-religious slice-of-life, see Saint Young Men]

Next: End-of-Year Thoughts


Copyright © 2006-2025 GLYS. All Rights Reserved.
